GxP Lifeline

The Link Between Regulation, Quality Systems and Data Integrity, Part 2


EDITOR'S NOTE: This is the second in a two-part series about the relationship between regulation and quality systems in the context of data integrity in the life sciences. Read part 1 of the blog post here.

Principles to Consider When Implementing a Data Integrity Quality System

Beyond the existing and widely documented elements of regulatory texts, such as the European Union (EU) GMP guidelines (see part 1), new concepts are emerging. These concepts deserve closer attention and should be taken into account when implementing a company's data integrity management policy.

1. Policy

The first new point is the idea of addressing data integrity at the highest level of the organization. A policy, or a general policy procedure, is needed that covers at least the following points:

- The definition of data integrity as it is understood within the company, together with its scope, which must account for data that is not stored or managed electronically and for any data held in a hybrid system. This does not mean rewriting the regulatory texts, but rather giving all staff an internal definition.

- The human resources plan for organizing data integrity management. For example, is there a data management committee or a data manager? This covers the organizational and human resources principles for data integrity management, as well as individual responsibilities for preserving data. The aim is to build an open organization, in line with the recommendations of the PIC/S guide.

- The implementation of management solutions, such as the principles of mapping, the general conditions of risk assessment and the appropriate measures to guarantee that data integrity is maintained. This involves defining the conditions under which data is used in the organization, how it is mapped, how risk is assessed across its lifecycle, and the measures taken to ensure its sustainability.

- Security must be addressed broadly, covering physical security, logical security and continuity (these concepts are explained below). Within logical security, it is also important to recognize the significance of the electronic signature. Businesses must also describe the methods used to ensure that all stakeholders give the electronic signature the same weight as a handwritten signature, as regulations require. This means confirming that employees who use an electronic signature have been informed of, and made aware of, its equivalence to a handwritten signature.

- The organizational methods for performing control reviews and incident tracking, and the processes for any corrective and preventive actions (CAPAs).

- The conditions under which data may be modified, transformed or transferred, which must be well defined. These conditions ensure that the necessary measures for each type of operation are carried out correctly and that the company can demonstrate the rationale behind them. These measures should demonstrate that data transfers or modifications have been through a satisfactory validation process and that they do not alter the initial semantic value of the data.

- The specific constraints on implementing IT solutions, notably the requirements that new solutions must meet to ensure that data integrity is maintained.

This policy must be approved at the highest level and must be the subject of a broad consensus within the organization. It must be distributed to staff, who must also receive general training on its requirements. This training must be planned, and accurate records of it must be kept.
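One common way to support the requirement that a data transfer does not alter the data is to compare cryptographic checksums of the source and the destination. The sketch below is an illustration under stated assumptions, not a method prescribed by the guidelines: it assumes the transfer is meant to produce a byte-for-byte identical copy, and the file names are hypothetical. A matching SHA-256 digest shows that the bytes are unchanged; it does not by itself validate a transformation (such as a format conversion), where preserving the semantic value has to be demonstrated by other means.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(source: Path, destination: Path) -> bool:
    """True only if the destination is a byte-for-byte copy of the source."""
    return sha256_of(source) == sha256_of(destination)


if __name__ == "__main__":
    # Hypothetical raw-data file copied to a hypothetical archive location.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "raw_result.csv"
        dst = Path(tmp) / "archived_result.csv"
        src.write_bytes(b"sample_id,assay\nS-001,99.2\n")
        shutil.copyfile(src, dst)
        print(verify_transfer(src, dst))  # True
        dst.write_bytes(b"sample_id,assay\nS-001,99.8\n")  # simulated alteration
        print(verify_transfer(src, dst))  # False
```

In practice, the source digest would be recorded before the transfer so that the comparison itself leaves a traceable record.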

2. Mapping/Risk Assessment

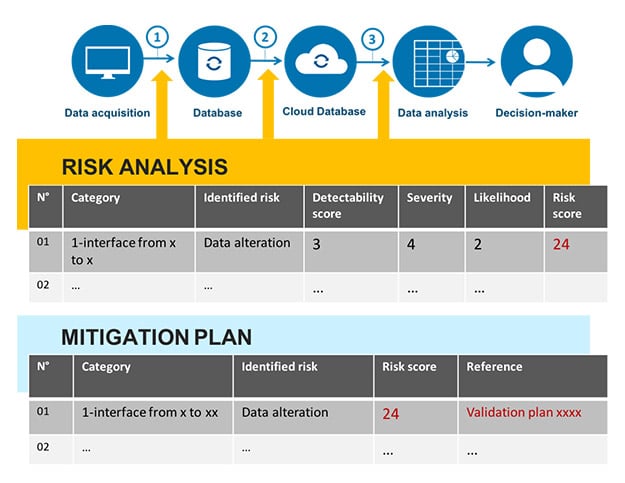

Mapping is a long-standing requirement of the EU GMP guidelines and has sometimes been confused with validation master plans (VMPs) (5 § 4.3). These two documents can be drafted together. It is, however, useful to handle mapping on its own so that it evolves continuously, not only as and when new solutions are implemented. Mapping, which in the past tended to take a systems-oriented view, must now take data into account in the form of a data flowchart. Here is an example of a data flowchart (fig. 3).

A data flowchart should identify:

- The elements that contribute to data generation;

- The elements used for the storage and protection of raw data, as well as the elements that enable quick and comprehensive data restoration;

- The places where data is transferred, and the steps the business takes to ensure that the meaning of the data is not altered;

- For the points identified as at-risk, the actions put in place to control or mitigate this risk;

- The complete data lifecycle from generation up to archiving or deletion, where appropriate.

The risk assessment must also identify the frequency of the reviews, based on the sensitivity of the data involved.
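The elements a data flowchart should identify can also be kept as structured records, which makes it easy to check that every at-risk point has a documented control and to derive a review frequency from the sensitivity of the data. The sketch below is hypothetical: the class names, the three sensitivity levels and the review intervals are illustrative assumptions, and real intervals must come from the company's own risk assessment.

```python
from dataclasses import dataclass, field
from enum import Enum


class Sensitivity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Illustrative review intervals in months (assumed values, not regulatory ones).
REVIEW_INTERVAL_MONTHS = {
    Sensitivity.LOW: 24,
    Sensitivity.MEDIUM: 12,
    Sensitivity.HIGH: 6,
}


@dataclass
class FlowStep:
    name: str      # lifecycle stage, e.g. "generation", "transfer", "archiving"
    system: str    # system or paper record involved at this stage
    at_risk: bool = False
    mitigations: list[str] = field(default_factory=list)


@dataclass
class DataFlow:
    data_item: str
    sensitivity: Sensitivity
    steps: list[FlowStep]

    def unmitigated_risks(self) -> list[str]:
        """Names of at-risk steps that have no documented control or mitigation."""
        return [s.name for s in self.steps if s.at_risk and not s.mitigations]

    def review_interval_months(self) -> int:
        """Review frequency derived from the sensitivity of the data."""
        return REVIEW_INTERVAL_MONTHS[self.sensitivity]


# Hypothetical flow for a single analytical result, from generation to archiving.
flow = DataFlow(
    data_item="HPLC assay result",
    sensitivity=Sensitivity.HIGH,
    steps=[
        FlowStep("generation", "HPLC workstation"),
        FlowStep("transfer", "network share", at_risk=True),
        FlowStep("storage", "LIMS", at_risk=True, mitigations=["daily backup"]),
        FlowStep("archiving", "electronic archive"),
    ],
)
print(flow.unmitigated_risks())       # ['transfer']
print(flow.review_interval_months())  # 6
```

Keeping the flowchart as data rather than only as a drawing is one way to let the mapping evolve continuously, as recommended above.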

3. Security

Security principles (5 § 12) must be covered by dedicated procedures and rest on three main components:

- Physical security. This covers all the methods and measures that ensure data cannot be altered by physical means. While large data systems are generally well protected, risks of alteration often appear in physically isolated solutions (e.g., standalone systems that control equipment and whose computer is easily accessible), in network devices (e.g., wiring closets) and in physical storage elements. In principle, issues such as the quality of the power supply, fire and flood risks, risks associated with animals, temperature and physical access, among others, should be considered. For data integrity, it is also worth considering the physical protection of paper data and the control of access to it.

- Logical security. This covers all the measures that guarantee that access to the systems is fully traceable and is granted only to suitably authorized individuals under a strict registration policy. Access to systems and/or data cannot be anonymous; it must be individual and authorized. Authorization is granted on the basis of a documented profile and after traceable training, which helps verify that users understand the importance of their actions. Staff acting as system administrators or data administrators must be trained, and this training must be planned and recorded. In this regard, the position of the PIC/S document (3 § 9.3) on administrators is worth noting. It highlights the sensitivity of this function and requires that the administrator be clearly identified as a person who is not operationally involved in the use of the system in question. According to best practices, an administrator must have a dedicated administrative account for administrative tasks, separate from the account used for other tasks. The administrator login must be traceable and must identify the connected person by name. Information on logical security, particularly a quick and easy way to identify people with sensitive rights in terms of data integrity (e.g., the right to change statuses), must be readily available to any auditor or inspector.
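The logical security requirements above (no anonymous access, a separate administrative account, and quick identification of people holding sensitive rights) lend themselves to a simple automated check over an exported list of accounts. The sketch below is hypothetical: the `Account` record, the right names in `SENSITIVE_RIGHTS` and `ADMIN_RIGHTS`, and the sample accounts are illustrative assumptions rather than any real system's access model. It does not check the PIC/S expectation that administrators are not operationally involved, which would need information about each person's role.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical right names; a real system would use its own profile definitions.
SENSITIVE_RIGHTS = frozenset({"change_status", "delete_record"})
ADMIN_RIGHTS = frozenset({"manage_users", "configure_system"})


@dataclass(frozen=True)
class Account:
    login: str
    person: Optional[str]  # named owner; None means a shared or anonymous account
    is_admin_account: bool
    rights: frozenset


def people_with_sensitive_rights(accounts: list[Account]) -> dict[str, set[str]]:
    """Map each account owner to the sensitive rights they hold, for auditors."""
    holders: dict[str, set[str]] = {}
    for acc in accounts:
        granted = acc.rights & SENSITIVE_RIGHTS
        if granted:
            owner = acc.person or f"<unnamed:{acc.login}>"
            holders.setdefault(owner, set()).update(granted)
    return holders


def policy_findings(accounts: list[Account]) -> list[str]:
    """Flag accounts that break the individual-access and admin-account rules."""
    findings = []
    for acc in accounts:
        if acc.person is None:
            findings.append(f"{acc.login}: not linked to a named individual")
        if not acc.is_admin_account and acc.rights & ADMIN_RIGHTS:
            findings.append(f"{acc.login}: admin rights on a non-administrative account")
    return findings


# Hypothetical accounts exported from a laboratory system.
accounts = [
    Account("jdoe", "Jane Doe", False, frozenset({"acquire_data", "change_status"})),
    Account("adm-asmith", "A. Smith", True, frozenset({"manage_users", "configure_system"})),
    Account("lab-pc-1", None, False, frozenset({"acquire_data", "manage_users"})),
]
print(people_with_sensitive_rights(accounts))  # {'Jane Doe': {'change_status'}}
print(policy_findings(accounts))
```

For the sample accounts, the findings flag `lab-pc-1` twice: once because it is not tied to a named person and once because it carries administrative rights outside a dedicated administrative account.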

- Continuity. This includes all the measures that allow a business to activate CAPA should any damage or other event occur, so that, whatever the event may be, no data is lost. These include the conditions for backup operations, as well as for restore operations, which can themselves alter data irreversibly. These measures are integrated into business continuity plans, notably disaster recovery plans (DRPs), which allow employees to return to work after a disaster, and emergency plans. This very broad topic is a business issue made up of multiple elements, and it guarantees, as a last resort, the security of the data.

4. Behavior

The importance given to governance at all levels, and particularly to behavior in data integrity management, is visible in the various guidelines (especially the PIC/S guide and the MHRA document).

It is easy to imagine the difficulties involved in anything linked to behavior. The PIC/S guide stresses this point and dedicates a whole section to it (§ 6): making staff aware of any temptations or pressures that could encourage them to falsify data is a necessary element to master. The PIC/S guide states “ … [a]n understanding of how behaviour influences ... the incentive to amend, delete or falsify data and ... the effectiveness of procedural controls designed to ensure data integrity … .”

It is, therefore, important to:

- Consider the implementation of data integrity within an organization as a program directly sponsored by those in the company’s highest positions of responsibility.

- Organize actions for employees to be active participants in data integrity.

- Put appropriate training in place to train staff in the importance and fragility of data as well as key concepts, such as the electronic signature, the traceability of data operations and audit trail reviews.

- Establish means of review, control and correction so that modifications can be made properly.

Inspectors and auditors expect this commitment to stem from a corporate culture that ensures fraudulent data alteration cannot occur.

Conclusion

Data integrity is an important process, one that requires an in-depth adjustment of a company's documentation system and quality system.

Over the past 20 years, topics related to the validation of computerized systems have been very widely discussed. It is true that with the term “information system,” the balance tilted largely toward the system rather than the information. It is therefore no small accomplishment that the conversation has been re-centered on data, emphasizing not only its importance but also its fragility and the threats it faces.

This topic is especially significant given the recent series of high-profile data security breaches at some of the largest companies in the life science industry. Even though such breaches are, in most cases, not the main source of risk to data, the subject is particularly sensitive.

Therefore, the key to mastering data integrity seems to rest on these three pillars:

- Behavior and governance: A matter for the business at all levels, reflecting a culture of quality.

- Mapping and risk assessment: Mapping a company’s data with a data flowchart and performing risk assessments that integrate the “extended” data concept, meaning signifiers, metadata and audit trails.

- Compliance: Particularly in everything related to security, in all its forms, and to validation.