GxP Lifeline

The Link Between Regulation, Quality Systems and Data Integrity, Part 1

EDITOR'S NOTE: This is the first in a two-part series about the relationship between regulation and quality systems with respect to data integrity in the life sciences.

The emergence of new guidelines on data integrity, and their interpretation during audits and inspections, does not detract from pre-existing elements of regulation, particularly Annex 11 of the European Union’s (EU) good manufacturing practice (GMP) guidelines. Strictly speaking, this is not about new regulations, but rather “... a new approach to the management and control of data ...”. This shift highlights aspects already described in existing rules and serves as a reminder that practices have deviated from them, whether through incorrect application of the regulation or through deliberate criminal acts.

Although data integrity is meant to cover all data, whatever its nature, we will focus here on electronic data and on data that is interpreted and manipulated by information systems.

The innovative nature of the international data integrity guidelines also brings a new way of looking at corporate responsibility. We will show how these concepts sometimes reiterate points already widely discussed in pre-existing rules, particularly Annex 11 of the EU GMP guidelines (5), and how certain situations shed new light on them. We will also examine the impact of implementing data integrity on an existing quality system, and the changes needed to meet data integrity requirements.

First and foremost, however, we will distinguish the aspects of data integrity that bring new insights from those that are already widely debated.

Principles of Data Integrity

The overall concept is now well established: it assesses whether data, on paper or electronic, meets the five principles known as Attributable, Legible, Contemporaneous, Original and Accurate (ALCOA) (2 - § III 1.) (A), or their extended version (ALCOA+). Each of these letters has already been explained and commented on in detail, so we will focus instead on identifying the new concepts.

Data and Metadata

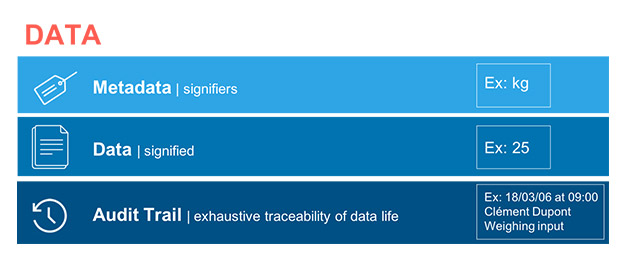

Through the lens of data integrity, the concept of data is becoming better understood. Data does not stand alone: every signifier data point depends on other, signified data points. For example, a weight measurement is only a figure, and that figure has no real value without the metadata that identifies its unit. The concept of data is therefore changing. A data point is no longer only a signifier, but the sum of all the data of which it is composed. Depending on the circumstances, this data may also evolve, and its history, including its successive states, is inseparable from it. We must therefore revise our concept of data: data is now the combination of the signifier, the metadata (which consists of signified data) and the associated audit trail and histories. This inseparable combination becomes the data. It is also understood that, depending on its nature, some data remains unalterable after its creation because of its medium (static data, such as data on paper), while other data can interact with the user (dynamic data, such as electronic data) and may later be reprocessed for better use.

These three concepts, whose combination represents a new vision, are clearly set out in the guidance of the U.S. Food and Drug Administration (FDA) (2 – III – 1 – b, c and d); in the guidance of the U.K.’s Medicines and Healthcare Products Regulatory Agency (MHRA), which more explicitly defines static data as a document in paper format and dynamic data as a record in electronic format (4 – 6.3, 6.7, 6.13); and in the guide of the international Pharmaceutical Inspection Co-operation Scheme (PIC/S) (3 – 7.5, 8.11.2). Taken together, this guidance defines a new unit (see Fig. 1) that then becomes the data.
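As an illustration only, the unit of data described above (signifier, metadata and audit trail held together) could be modelled in Python roughly as follows. This is a minimal sketch under our own assumptions: the names `DataRecord`, `AuditEntry`, `amend` and `states`, and the instrument identifier `BAL-01`, are hypothetical and do not come from the FDA, MHRA or PIC/S documents. A real system would also need access control, trusted time stamps and protected storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class AuditEntry:
    """One immutable audit-trail entry: who did what, when, and why."""
    timestamp: datetime
    user: str
    action: str
    old_value: Any
    new_value: Any
    reason: str


@dataclass
class DataRecord:
    """Hypothetical model of a data point as one unit:
    signifier (value) + metadata (signified data) + audit trail."""
    value: float                 # the raw figure, e.g. a weight
    metadata: dict[str, str]     # e.g. {"unit": "mg", "instrument": "..."}
    created_by: str
    audit_trail: list[AuditEntry] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Log the creation itself, so the first state is traceable too.
        self.audit_trail.append(AuditEntry(
            datetime.now(timezone.utc), self.created_by, "create",
            None, self.value, "initial acquisition"))

    def amend(self, new_value: float, user: str, reason: str) -> None:
        """Change the value while keeping the previous state in the trail."""
        if not reason.strip():
            raise ValueError("an amendment must state a reason")
        self.audit_trail.append(AuditEntry(
            datetime.now(timezone.utc), user, "amend",
            self.value, new_value, reason))
        self.value = new_value

    def states(self) -> list[Any]:
        """Successive values of the data point, oldest first."""
        return [entry.new_value for entry in self.audit_trail]

    def describe(self) -> str:
        """The figure is only meaningful together with its unit."""
        unit = self.metadata.get("unit", "<unit missing>")
        return f"{self.value} {unit}"


if __name__ == "__main__":
    weight = DataRecord(12.5, {"unit": "mg", "instrument": "BAL-01"},
                        created_by="analyst1")
    weight.amend(12.3, user="analyst1", reason="transcription error")
    print(weight.describe())  # 12.3 mg
    print(weight.states())    # [12.5, 12.3]
```

The design choice worth noting is that `amend` never overwrites silently: the previous value survives in the audit trail, so the successive states remain part of the data, in line with the idea that a data point's history is inseparable from it.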

Governance and Behavior

Another major point stands out quickly when reading the standards: data integrity requires involvement at the “highest levels of the organization” (4 § 3.3), and “Senior management should be accountable” (4 § 6.5) for it. Management must take on this responsibility, together with the responsibility for ensuring that data integrity training and procedures are carried out. The focus is on employee and manager behavior in data integrity management. The PIC/S guide establishes a form of categorization based on a company’s attitude towards the behavior of its employees when they face data integrity issues. In a company with an open culture, all employees can challenge hierarchical norms where the best protection of data is concerned. This contrasts with a business with a closed culture, where it is culturally more difficult to challenge management or to report anomalies. Within an open business, employees know that they can resist any form of hierarchical pressure that would run contrary to data integrity requirements.

This point is emphasized by the PIC/S document, which dedicates a large section to the notion of data governance, staff training and involvement at the highest level of the business.

The authors of these documents clearly expect organizations to commit, at the highest level, to action plans for a genuine data integrity management program. Businesses are encouraged to designate sponsors within the management team; to carry out a genuine implementation project; to train all staff in data integrity awareness and its implications; and to carry out periodic reviews, with any corrective and preventive actions (CAPA) needed.

Data Media

The concept of combining data and metadata also implies considering the various types of media. Blank forms (2 § 6) must also be controlled. Where the medium itself determines some of the signified data (i.e., qualifying information), the medium is also subject to a controlled lifecycle. Even if this concept is more specifically interpreted as applying to data in paper format, it also applies to electronic data (management of data entry forms) and to data in Excel spreadsheet templates (the calculation templates often found in laboratories). The PIC/S guide considers this point mainly through the lens of paper forms (3 § 8 and the points that follow), but it should be noted that these forms, whether filled in manually or electronically, often exist in electronic format.

Data Lifecycle

The emergence of the concept of a data lifecycle should also be noted. This idea takes the entire lifecycle of the data into account, from its generation (and monitoring) through its storage, any transformations and its use, to its archiving (4 § 6.6 or 3 § 13.7). The data lifecycle contrasts with the relatively archaic concept of the software development lifecycle, and this is where the innovative nature of data integrity can be seen: data (particularly electronic data) exists in its own right, not only through the software that generates and handles it.

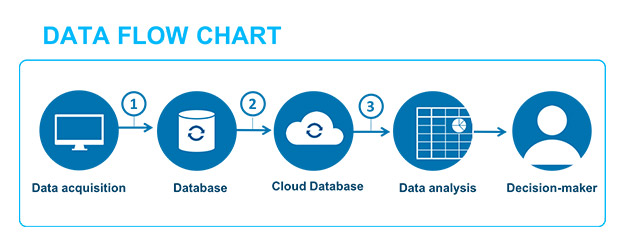

As a result, data that was previously viewed and studied only through software, which had to be validated, detaches itself completely from the “system” and becomes information. The spread of information technology in business leads to more and more of these data transfers. Such data, generated by production or monitoring equipment, is easily passed through several software applications and ultimately plays a part in decisions relating to patient safety or product quality (see the example in Fig. 2).

The data lifecycle concept shows why we must ensure that data used to make a decision regarding a patient’s health or the quality of the product keeps the meaning and the initial value generated by the source equipment. It is therefore important to demonstrate and guarantee that successive transformations of the data do not alter this value, and that the raw source data always remains accessible when needed.

Data Migration, Data Transfer and Interfaces

A data point, in its broadest form, may be transferred (moved from one IT solution to another) or migrated (re-established in a new IT system by means of a data transfer). It may even be regenerated if its storage structure becomes obsolete or cannot be preserved. Any such operation must follow an appropriate process that verifies and documents that the quality of the data has been preserved. PIC/S considers data transfers part of the data lifecycle (3 § 5.12). For the MHRA (4 § 6.8), it is a fully fledged process that must be backed by a “rationale,” or robust validation, demonstrating data integrity. Notably, the MHRA guidance stresses the risks associated with this type of operation and discusses common mistakes in this area.
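One common way to produce objective evidence that a transfer or migration preserved the data is to compare the source and target datasets record by record. The sketch below is an illustration, not a validated procedure and not a method prescribed by the MHRA or PIC/S. It assumes, hypothetically, that both datasets can be exported as Python dictionaries sharing a unique `id` field; the function names `fingerprint` and `verify_transfer` are our own. Each record is fingerprinted with SHA-256 over a canonical JSON form, and missing, unexpected or altered records are reported.

```python
import hashlib
import json
from typing import Any

Record = dict[str, Any]


def fingerprint(record: Record) -> str:
    """SHA-256 digest of a canonical JSON form of one record.

    Sorting the keys makes the digest independent of field order, so only
    a real change in a value or its metadata changes the fingerprint.
    """
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_transfer(source: list[Record], target: list[Record],
                    key: str = "id") -> list[str]:
    """Compare exported source and target datasets record by record.

    Returns a list of discrepancies; an empty list means every source
    record arrived unchanged and nothing unexpected appeared.
    """
    problems: list[str] = []
    src = {r[key]: fingerprint(r) for r in source}
    dst = {r[key]: fingerprint(r) for r in target}
    # Duplicate keys collapse in the dicts above, so flag them explicitly.
    if len(src) != len(source):
        problems.append("duplicate keys in source")
    if len(dst) != len(target):
        problems.append("duplicate keys in target")
    for rid in sorted(src.keys() - dst.keys()):
        problems.append(f"missing in target: {rid}")
    for rid in sorted(dst.keys() - src.keys()):
        problems.append(f"unexpected in target: {rid}")
    for rid in sorted(src.keys() & dst.keys()):
        if src[rid] != dst[rid]:
            problems.append(f"altered during transfer: {rid}")
    return problems


if __name__ == "__main__":
    source = [{"id": "S1", "value": 12.5, "unit": "mg"},
              {"id": "S2", "value": 7.0, "unit": "mg"}]
    # Hypothetical target where the unit of S2 was changed in transit.
    target = [{"id": "S1", "value": 12.5, "unit": "mg"},
              {"id": "S2", "value": 7.0, "unit": "g"}]
    print(verify_transfer(source, target))  # ['altered during transfer: S2']
```

Because the unit is hashed together with the value, a change to the metadata alone is flagged, which reflects the idea that metadata is part of the data. Note that this only compares what each system exports; the export steps themselves would still need to be shown to be faithful.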

Existing Concepts

Without minimizing their importance, there are also concepts that are already widely discussed in European and/or American guidelines:

- Electronic signatures (2 § III – 11; 3 § 9.3; 4 § 6.14; 5 § 14) and the audit trail (2 § III – 1 c; 3 § 9.4; 4 § 6.13; 5 § 9), covering all modifications and actions performed on data;

- The different forms of security including access to data and to systems (see a more detailed discussion of security below) (2 § III 1.e and 1.f; 3 § 9.3; 4 § 6.16, 6.17, 6.18; 5 § 12 and the points that follow);

- Mapping/inventory with risk assessments to identify and manage your systems and your data (3 § 9.21; 4 § 3.4; 5 § 4.3), including data flows, data transfers and software changes over time, as well as areas of risk, with an evaluation of the nature and scope of each risk;

- Periodic reviews and management of incidents with CAPAs, if necessary (3 § 9.2.1 – 4; 4 § 6.15; 5 § 11, 13);

- The temporality of data and the immediacy of its creation (the link between data and events) (3 § 8.4; 4 § 5.1).

The second part of this two-part blog post series was published in GxP Lifeline on May 31, 2018.

See what they do at Apsalys: www.apsalys.com